ORA-00164: distributed autonomous transaction disallowed within migratable distributed transaction / ORA-24777: use of non-migratable database link not allowed

Situation

A BPEL process used an XA datasource with global transaction support to call a PL/SQL function. This function read data from a view through a private database link and inserted it into a local table. The following exception occurred:

ORA-24777: use of non-migratable database link not allowed

When PRAGMA AUTONOMOUS_TRANSACTION was used in the function, this exception occurred instead:

ORA-00164: distributed autonomous transaction disallowed within migratable distributed transaction

Splitting the fetch from the view and the insert into the local table (using collections) did not help, and adding COMMIT statements made no difference either.

Solution

The best solution is not to use an XA datasource. Other options are using a shared public database link or configuring the database server for MTS (Multi-Threaded Server, now called shared server); the latter is not advisable!

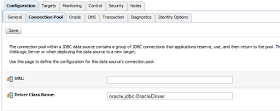

The steps below show how to configure a datasource that does not support distributed (global) transactions.

In the JDBC datasource configuration, under Configuration > Connection Pool, use the non-XA driver for Oracle databases: oracle.jdbc.OracleDriver.

Under Configuration > Transactions, disable Supports Global Transactions.
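For reference, the two console settings above roughly correspond to the following in a WebLogic JDBC module descriptor (the `-jdbc.xml` file under the domain's `config/jdbc` directory). This is a minimal sketch that assumes a WebLogic 10.3.x (11g) domain. The datasource name, JNDI name, URL and user are hypothetical placeholders. Compare it against a descriptor generated by your own console rather than copying it verbatim.

```xml
<!-- Hypothetical non-XA datasource; name, URL, user and JNDI name are placeholders -->
<jdbc-data-source xmlns="http://xmlns.oracle.com/weblogic/jdbc-data-source">
  <name>MyNonXADataSource</name>
  <jdbc-driver-params>
    <url>jdbc:oracle:thin:@dbhost:1521/ORCL</url>
    <!-- Non-XA driver, instead of oracle.jdbc.xa.client.OracleXADataSource -->
    <driver-name>oracle.jdbc.OracleDriver</driver-name>
    <properties>
      <property>
        <name>user</name>
        <value>app_user</value>
      </property>
    </properties>
    <!-- The encrypted password element written by the console is omitted here -->
  </jdbc-driver-params>
  <jdbc-data-source-params>
    <jndi-name>jdbc/MyNonXADataSource</jndi-name>
    <!-- Equivalent of "Supports Global Transactions" being unchecked -->
    <global-transactions-protocol>None</global-transactions-protocol>
  </jdbc-data-source-params>
</jdbc-data-source>
```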

In the DbAdapter configuration, under Outbound Connection Pools, open the specific connection factory and make sure that, under Properties, dataSourceName is filled in rather than xADataSourceName.

Under Transaction Support, select No Transaction.
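The connection factory settings above end up as a connection instance in the DbAdapter's weblogic-ra.xml, or in the deployment plan the console uses to override it. The following is a sketch that assumes a hypothetical JNDI name `eis/DB/MyConnection` and a hypothetical non-XA datasource `jdbc/MyNonXADataSource`. The element names follow the WebLogic connector descriptor as I understand it, so verify them against your own adapter deployment.

```xml
<!-- Hypothetical entry inside <outbound-resource-adapter>/<connection-definition-group> -->
<connection-instance>
  <!-- This JNDI name is what the process's _db.jca file refers to -->
  <jndi-name>eis/DB/MyConnection</jndi-name>
  <connection-properties>
    <!-- "Transaction Support: No Transaction" -->
    <transaction-support>NoTransaction</transaction-support>
    <properties>
      <property>
        <!-- Non-XA datasource goes in dataSourceName ... -->
        <name>dataSourceName</name>
        <value>jdbc/MyNonXADataSource</value>
      </property>
      <property>
        <!-- ... and xADataSourceName stays empty -->
        <name>xADataSourceName</name>
        <value></value>
      </property>
    </properties>
  </connection-properties>
</connection-instance>
```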

I had to restart the domain for the changes to take effect.

Exception occured when binding was invoked

Situation

After adding a ConnectionFactory to the DbAdapter, I noticed that its JNDI name was incorrect, so I changed it. I made sure a DataSource was created and specified in the ConnectionFactory. When a BPEL process then connected to the database (using the ConnectionFactory specified in the DbAdapter), I got the following error.

Error

```xml
<bindingFault>
  <part name="summary">
    <summary>Exception occured when binding was invoked. Exception occured during invocation of JCA binding: "JCA Binding execute of Reference operation 'censored_DB' failed due to: Could not create/access the TopLink Session. This session is used to connect to the datastore. Caused by javax.resource.spi.InvalidPropertyException: Missing Property Exception. Missing Property: [ConnectionFactory> xADataSourceName or dataSourceName]. You may have set a property (in _db.jca) which requires another property to be set also. Make sure the property is set in the interaction (activation) spec by editing its definition in _db.jca. . You may need to configure the connection settings in the deployment descriptor (i.e. DbAdapter.rar#META-INF/weblogic-ra.xml) and restart the server. This exception is considered not retriable, likely due to a modelling mistake. ". The invoked JCA adapter raised a resource exception. Please examine the above error message carefully to determine a resolution. </summary>
  </part>
  <part name="detail">
    <detail>Missing Property Exception. Missing Property: [ConnectionFactory> xADataSourceName or dataSourceName]. You may have set a property (in _db.jca) which requires another property to be set also. Make sure the property is set in the interaction (activation) spec by editing its definition in _db.jca. </detail>
  </part>
  <part name="code">
    <code>null</code>
  </part>
</bindingFault>
```

Solution

I had not specified any unusual properties in the .jca files. First, of course, I checked that the DataSource was correctly specified in the ConnectionFactory. Restarting the JDBC connection pool and updating the DbAdapter did not help. Recreating the ConnectionFactory, however, solved the issue.
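Because the problem started after the JNDI name was changed, it is worth checking that the process's `_db.jca` file points at the same JNDI name as the ConnectionFactory. Below is a sketch of the relevant part of such a file. The adapter-config name, WSDL name, JNDI name and UIConnectionName are hypothetical placeholders; in practice the JDeveloper wizard generates these.

```xml
<!-- Hypothetical _db.jca fragment; all names are placeholders -->
<adapter-config name="MyDbReference" adapter="Database Adapter"
                wsdlLocation="MyDbReference.wsdl"
                xmlns="http://platform.integration.oracle/blocks/adapter/fw/metadata">
  <!-- location should equal the JNDI name of the DbAdapter ConnectionFactory -->
  <connection-factory location="eis/DB/MyConnection" UIConnectionName="MyConnection" adapterRef=""/>
  <!-- endpoint-interaction / interaction-spec generated by the wizard omitted -->
</adapter-config>
```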

Articles containing tips, tricks and nice to knows related to IT stuff I find interesting. Also serves as online memory.

Friday, February 24, 2012

Thursday, February 23, 2012

Changing properties of a BPEL process at runtime

It is often useful to be able to set properties that influence the behavior of a BPEL process after it has been deployed. Of course, a danger of changing settings at runtime is that they also need to be applied to the development branch, to avoid the changes being overwritten when the process is redeployed. Oracle provides several mechanisms for changing the settings of a BPEL process at runtime. I will illustrate two of them below.

Polling for files

Suppose the filesystem path that is polled for files changes. The File Adapter configuration wizard provides the option to specify the path with a logical name:

The value of this logical name can be set in composite.xml:

At runtime, the value of this logical name can be changed:
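As a rough illustration, the composite.xml side of a logical directory name can look like the sketch below. The service name, .jca file name, logical name `InputDir` and path are hypothetical. The `type`/`many`/`override` attributes reflect what JDeveloper 11g typically generates, as far as I know, so treat the exact attribute set as an assumption.

```xml
<!-- composite.xml fragment; service name, logical name and path are hypothetical -->
<service name="ReadFile">
  <!-- <interface.wsdl .../> generated by JDeveloper omitted -->
  <binding.jca config="ReadFile_file.jca">
    <!-- Logical name chosen in the File Adapter wizard, mapped to a physical path -->
    <property name="InputDir" type="xs:string" many="false" override="may">/u01/data/in</property>
  </binding.jca>
</service>
```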

This example is also explained in the SOA Suite manual and is pretty straightforward. The File Adapter properties screen displays the logical names and allows them to be changed. However, what if you want to use preferences that are not linked to a specific adapter service? The example below shows how that can be accomplished.

Other BPEL preferences

The following is based on http://eelzinga.wordpress.com/2009/10/28/oracle-soa-suite-11g-setting-and-getting-preferences/

You can add preferences to the composite.xml file as shown below. The property name must start with bpel.preference.
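A minimal sketch of such a preference in composite.xml follows. The component name, BPEL file name, preference name `targetSystem` and its value are hypothetical. The property is placed on the BPEL component, which is the component the MBean path in this post points to.

```xml
<!-- composite.xml fragment; component, preference name and value are hypothetical -->
<component name="MyProcess">
  <implementation.bpel src="MyProcess.bpel"/>
  <!-- Name starts with bpel.preference.; the remainder (targetSystem) is the preference name -->
  <property name="bpel.preference.targetSystem">TEST</property>
</component>
```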

In the BPEL process, you can use the preference with the ora:getPreference XPath function.
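In BPEL 1.1 source, reading a preference into a variable could look like the sketch below. It assumes a hypothetical preference defined as `bpel.preference.targetSystem`, passed to the function without the `bpel.preference.` prefix as in the article referenced above. It also assumes a hypothetical string variable `targetSystem` declared in the process.

```xml
<!-- BPEL 1.1 assign; preference name and variable name are hypothetical -->
<assign name="AssignPreference">
  <copy>
    <!-- Reads the value of bpel.preference.targetSystem -->
    <from expression="ora:getPreference('targetSystem')"/>
    <!-- targetSystem: a variable of type xsd:string declared in the process -->
    <to variable="targetSystem"/>
  </copy>
</assign>
```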

You can then change the setting with the MBean browser by navigating to:

Application Defined MBeans -> oracle.soa.config -> Server : soa_server1 -> SCAComposite -> [project name] -> SCAComposite.SCAComponent -> [process name]. This is illustrated below.

Keep in mind that, unless you take additional action, the settings are reset to their defaults when the application server is restarted. See http://beatechnologies.wordpress.com/tag/persist-the-values-of-preferences-in-bpel/

Using the MDS

One of the nice new features of Oracle SOA Suite 11g is MDS (Metadata Services), a unified store for metadata. When, for example, a BPEL process is deployed, it is stored in the MDS. Among other things, this store can also be used to share common artifacts such as XSDs, EDL (Event Definition Language) files and WSDL files. The MDS provides a more secure and more maintainable alternative to putting these artifacts on, for example, a web server. JDeveloper also supports browsing the MDS directly, and the MDS can be imported and exported from Enterprise Manager.
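To illustrate sharing an artifact through the MDS, a WSDL can import a shared XSD with an `oramds:` location instead of an HTTP URL. This is a sketch only: the namespace and the path `/apps/common/xsd/Customer.xsd` are hypothetical, and the `wsdl` prefix is assumed to be declared on the enclosing `wsdl:definitions` element.

```xml
<!-- Hypothetical WSDL fragment; namespace and MDS path are placeholders -->
<wsdl:types>
  <xsd:schema xmlns:xsd="http://www.w3.org/2001/XMLSchema">
    <!-- Shared artifacts deployed to the MDS are referenced under oramds:/apps/ -->
    <xsd:import namespace="http://example.com/common/customer"
                schemaLocation="oramds:/apps/common/xsd/Customer.xsd"/>
  </xsd:schema>
</wsdl:types>
```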

However, the MDS cannot be updated on a per-application basis directly from JDeveloper without some additional scripts.

Because Ant scripts that make working with the MDS easier are already available, I will not repeat those sources and will only point out what I needed to do to make those examples work with my installation of JDeveloper 11.1.1.5. Edwin Biemond has provided a base script at http://biemond.blogspot.com/2009/11/soa-suite-11g-mds-deploy-and-removal.html. A tutorial describing an implementation of scripts similar to the base example can be found at http://technology.amis.nl/blog/15295/using-the-metadata-services-in-a-soa-environment-part-1

To make the script from the tutorial work from JDeveloper, I had to do two things:

- download ant-contrib-1.0b3.jar from http://sourceforge.net/projects/ant-contrib/files/

- create a libs directory at the location referenced by the relative path below, put the jar in it, and add the following lines to the build.xml file:

```xml
<taskdef resource="net/sf/antcontrib/antcontrib.properties">
  <classpath>
    <pathelement location="../libs/ant-contrib-1.0b3.jar" />
  </classpath>
</taskdef>
```
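Once the taskdef is loaded, ant-contrib tasks such as `foreach` become available in build.xml. The sketch below shows the kind of loop such MDS scripts use to process several applications in one run. The property `mds.applications`, the targets `deployAllMds` and `deployMds`, and the application names are hypothetical. The actual packaging and deployment steps come from the scripts referenced above and are not reproduced here.

```xml
<!-- Hypothetical property and targets; names are placeholders -->
<property name="mds.applications" value="common,customer"/>

<target name="deployAllMds">
  <!-- foreach (ant-contrib) calls deployMds once per comma-separated entry -->
  <foreach list="${mds.applications}" param="mds.application"
           target="deployMds" inheritall="true"/>
</target>

<target name="deployMds">
  <echo message="Deploying MDS artifacts for ${mds.application}"/>
  <!-- packaging/deployment steps from the referenced scripts go here -->
</target>
```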

For reference, you can download my test project. I've removed the server name, user and password from env.dev.properties. http://dl.dropbox.com/u/6693935/mds-store.zip


Friday, February 17, 2012

Scheduling BPEL processes

Introduction

A BPEL process needs a trigger to be started. This trigger can be the result of polling by one of the JCA adapters, or it can be external, for example a service call.


Usually the trigger is a database event, for example a change in a table, or a direct call made from a database table trigger using the UTL_HTTP package.

Using UTL_HTTP from a database trigger to call a BPEL process directly requires the endpoint of the BPEL process to be known in the database, and the ACL (access control list) to be configured. This, however, can be difficult to implement and maintain if there are many (database and application server) environments.

The customer

Sometimes it is difficult to obtain a trigger from the database. This is the case if, for example, changes in a table cannot be monitored directly by a polling database adapter. If the customer uses updateable views (see http://psoug.org/reference/views.html on updateable views), BPEL can use them, but not all views are updateable. Of course, a separate audit table or file can be used for that; however, this requires additional changes outside the scope of the SCA deployment.


Other options, among them some suggested by Lucas Jellema on

were tried. Using the database adapter to poll for a function result from dual, to check whether processing was required, was not easy to implement because the database adapter wizard requires a mechanism to mark processed records. Disclaimer: I spent little time experimenting with this. I also didn't like the idea of a looping BPEL process that uses the While and Wait activities to provide scheduling. That process also needs to be started (a chicken-and-egg problem), and its process flow would become enormous if, for example, it ran every few seconds for several years. Such a flow could cause, among other things, memory problems when opened in a web browser for viewing.

Quartz

A Java programmer suggested using the open source Java scheduler Quartz for scheduling: http://terracotta.org/downloads/quartz-scheduler. Another BPEL developer said he had experimented with using Quartz to create BPEL instances and had a working example somewhere. This prompted me to start reading the Quartz documentation. Of course, because I'm always optimistic, I first thought about possible issues, such as the time required to configure the scheduler to initialize at server start/deployment and the time it would take to implement it.


Then I found the following document, http://www.oracle.com/technetwork/middleware/soasuite/learnmore/soascheduler-186798.pdf, and my problems were solved. WebLogic Server contains Quartz libraries that are already configured to start at server startup, which saved me configuration time, and the example was thorough enough to make the implementation easy.

Nice to know: if you want to use the example mentioned above, don't use a 2.x version of Quartz unless you don't mind spending time migrating the Quartz 1.x example code to 2.x (the API changed considerably) and dealing with class version conflicts, since WebLogic already ships Quartz 1.x libraries.

Update

Release 11.1.1.6 of Oracle Fusion Middleware contains Oracle Enterprise Scheduler:

http://docs.oracle.com/cd/E23943_01/dev.1111/e24713/intro.htm#sthref10

This might be an even better alternative than using Quartz. I haven't had time to dive into it yet, though.

Even when using relative URLs in the SOAScheduler process, making this work in a clustered environment is challenging.


Parallel execution using FlowN and variable scoping

The BPEL FlowN activity can be used to execute activities in parallel. It is often used to split a message into parts and process those parts simultaneously. When using FlowN, it is advisable to put a scope inside it and use local variables. If you neglect to do that, the results can differ from what you might expect.

To demonstrate this I created a database table and a simple BPEL process. The BPEL process inserts the counter variable of the FlowN activity into a table.

See the screenshot below for an overview of the process.

All variables in this process are global; I let JDeveloper generate a global variable when I created the invoke activity. The assign activity consists of the following:

- Assign an empty XML fragment to the variable used for the invoke

- Assign values to the fields of this variable. I used the FlowN counter (FlowN1_Variable_1) as one of the values.

When I check the database, I see the following:

This is, of course, expected behavior. The assign activities are executed in parallel on a global variable, so the value that variable holds at the moment of each invoke is determined not only by what happens in its own FlowN branch but also by what happens in the other branches.

Fixing this is easy: create a new scope inside the FlowN activity and use local variables inside that scope. This makes the FlowN branches independent of each other.
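The fix can be sketched in BPEL 1.1 source roughly as follows, assuming Oracle's `bpelx:flowN` extension as generated by JDeveloper 11g. Only `FlowN1` and `FlowN1_Variable_1` come from the process described here. The partner link, port type, operation, message type, part name and XPath query are hypothetical placeholders. The step that assigns an empty XML fragment to the variable is left out for brevity.

```xml
<!-- bpelx is assumed declared on <process> as http://schemas.oracle.com/bpel/extension -->
<!-- Partner link, port type, operation, message type, part and query are hypothetical -->
<bpelx:flowN name="FlowN1" N="3" indexVariable="FlowN1_Variable_1">
  <!-- Each parallel branch runs its own instance of this scope -->
  <scope name="BranchScope">
    <variables>
      <!-- Local invoke variable instead of a process-level (global) one -->
      <variable name="InsertCounter_InputVariable" messageType="ns1:args_in_msg"/>
    </variables>
    <sequence>
      <assign name="AssignCounter">
        <copy>
          <from variable="FlowN1_Variable_1"/>
          <to variable="InsertCounter_InputVariable" part="InputParameters"
              query="/ns2:InputParameters/ns2:P_ID"/>
        </copy>
      </assign>
      <invoke name="InsertCounter" partnerLink="InsertCounterDB"
              portType="ns1:InsertCounterDB_ptt" operation="InsertCounterDB"
              inputVariable="InsertCounter_InputVariable"/>
    </sequence>
  </scope>
</bpelx:flowN>
```

Because the invoke variable is declared inside the scope, each branch assigns and invokes with its own copy. A value set in one branch therefore cannot be overwritten by another branch before the invoke.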

The last three results below (ids 4, 5 and 6) show the outcome after adding the scope and moving the invoke variable into it. The screenshot below also illustrates that the order of execution is not fixed when branches are executed in parallel.