Coming to this solution was a journey. I was looking for a Kubernetes installation that was easy to deploy and clean up on my own laptop (I didn't want to pay for a hosted solution). I did want something more or less production-like, because I wanted to play with storage solutions and deal with cluster challenges and load balancers, things I couldn't easily do on environments like Minikube and MicroK8s. Also, since I was running on a single piece of hardware, I needed a virtualization technology. Each of these comes with its own challenges. On some it is difficult to get storage solutions to work, for example LXC/LXD (an alternative to Docker). Some come with networking challenges, like Hyper-V, and some just don't perform well, like VirtualBox. I also needed some form of automation to create, destroy and access my virtual environments. That is a long list of requirements, and this is what I ended up with: a production-like environment which is quick to create, destroy or reset, running on my laptop with easy management tools.

Charms

Charmed Kubernetes is a pure upstream distribution of Kubernetes by Canonical (you know, the people from Ubuntu). It can be deployed using so-called Charms with a tool called Juju. Charms consist of a definition of applications and their required resources. Using Juju you can deploy charms to various environments such as AWS, Azure, OpenStack and GCP. You can also deploy locally to LXC/LXD containers or to a MaaS (Metal as a Service) solution. Juju allows easy SSH access into nodes and provides several commands to manage the environment. In addition, it comes with a nice GUI.

Virtualization technology

During my first experiments I tried deploying locally to LXC/LXD. It looked nice at first: I was able to deploy an entire Kubernetes environment using very few commands, and it was quick to start, stop and rebuild. However, when I tried more complex things like deploying StorageOS, CephFS or OpenEBS, I failed miserably. After trying many things I found I was mainly trying to get LXC/LXD to do what I wanted. My focus wasn't getting to know LXC/LXD in depth but playing with Kubernetes. Since LXC/LXD isn't a full virtualization solution (it uses the same kernel as the host, similar to Docker), it was difficult to pass the required devices, with the required privileges, from the host into the LXC/LXD containers and from those containers into the containerd processes. This caused various issues with the storage solutions. I decided I needed a full virtualization solution which also virtualizes the node OS, so KVM or VirtualBox (I had no intention of running on Windows, so I dropped Hyper-V).

Deployment tooling

I already mentioned I wanted an easy way to manage the virtualized environments. I started out with Vagrant, but Vagrant scripts that produce a production-like environment out of the box were hard to find, and Vagrant would require me to define all the machines myself in Vagrant code. The Kubernetes distributions I ended up with were far from production-like. They were mostly automated by developers with requirements similar to my own, but those solutions were not kept up to date by a big company or shared by many users. I wanted automation like Juju, but without LXC/LXD. What were my options without having to pay anything? MaaS, Metal as a Service, was something to try out, since Juju can deploy to MaaS. I found that MaaS could be configured to create KVM machines on Juju's request! Nice, let's use it!

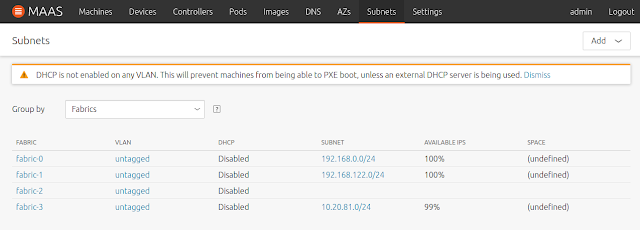

My main challenge was getting networking to work. MaaS can create virtual machines in KVM using virsh. The machines boot and then have to be supplied with software that allows management and deployment of applications. MaaS does this by 'catching' machines when they send DHCP requests and then using PXE to provide the required software. This is all automated; you only have to provide the networking part.

Bringing it all together

I started out with a clean Ubuntu 18.04 install (desktop, minimal GUI) on bare metal. I could also have chosen 20.04, which had recently been released, but I didn't want to take any chances with the available tutorials by choosing a release whose changes might make them fail. I expect these steps also apply to 20.04, since it is not that different from 18.04, but I haven't tried.

Some preparations

First I installed some packages required for the setup. I chose to install MaaS from packages instead of Snap, since I couldn't get the connection to KVM to work with the Snap installation. The package installation creates a user maas, which is used to connect to the libvirt daemon to create VMs.

sudo apt-add-repository -yu ppa:maas/stable

sudo apt-get update

sudo apt-get -y install bridge-utils qemu-kvm qemu virt-manager net-tools openssh-server mlocate maas maas-region-controller maas-rack-controller
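
KVM needs hardware virtualization support (Intel VT-x or AMD-V) to run VMs at a reasonable speed. The sketch below is an optional pre-flight check that is not part of the original setup: it looks for the vmx or svm CPU flag and for the /dev/kvm device node. The function names are made up for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight helpers for KVM (not part of the original setup).

# Prints "yes" and succeeds if the CPU advertises Intel VT-x ("vmx") or
# AMD-V ("svm"). The cpuinfo path is a parameter so it can be tried on a copy.
has_hw_virt() {
  local cpuinfo="${1:-/proc/cpuinfo}"
  if grep -Eqw 'vmx|svm' "$cpuinfo"; then
    echo "yes"
  else
    echo "no"
    return 1
  fi
}

# Succeeds if the KVM device node exists as a character device.
has_kvm_device() {
  [ -c "${1:-/dev/kvm}" ]
}
```

On the host you would run `has_hw_virt && has_kvm_device && echo "KVM looks usable"`. The CPU flag alone only says the processor supports virtualization; if it is disabled in the BIOS, /dev/kvm will typically be missing, which is why both checks are there.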

Configure the network

Probably the most difficult step. Part of the magic is done by using the following netplan configuration:

sudo bash

cat << EOF > /etc/netplan/01-netcfg.yaml
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    dummy:
      dhcp4: false
  bridges:
    br0:
      interfaces: [dummy]
      dhcp4: false
      addresses: [10.20.81.2/24]
      gateway4: 10.20.81.254
      nameservers:
        addresses: [10.20.81.1]
      parameters:
        stp: false
EOF

netplan apply

exit

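This creates a bridge interface and subnet which allow the VMs to communicate with each other and with the host. Setting stp to false (disabling the Spanning Tree Protocol on the bridge) is needed for PXE, the network boot method MaaS uses, to work: with STP enabled, the bridge holds back traffic on a newly attached VM port for a while, and the VM's PXE DHCP request can time out in the meantime.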
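
To confirm that STP really is off on br0 after running netplan apply, you can read the setting the kernel exposes in sysfs: /sys/class/net/br0/bridge/stp_state is 0 when STP is disabled. A small sketch, with a hypothetical function name and an optional sysfs root parameter that only exists to make it testable:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if STP is disabled on the given bridge.
# The kernel reports the setting in <sysfs>/<bridge>/bridge/stp_state:
# 0 = disabled, anything else = some form of STP enabled.
stp_disabled() {
  local bridge="${1:-br0}"
  local sysfs="${2:-/sys/class/net}"
  local state_file="$sysfs/$bridge/bridge/stp_state"
  if [ ! -r "$state_file" ]; then
    echo "$bridge: not found or not a bridge" >&2
    return 2
  fi
  [ "$(cat "$state_file")" = "0" ]
}
```

Usage: `stp_disabled br0 && echo "STP is off on br0"`. The `brctl show` command from bridge-utils, installed earlier, shows the same information in its "STP enabled" column.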

The commands below replace KVM's default network with a libvirt network that the VMs can use to join the subnet: it bridges them onto br0.

sudo virsh net-destroy default

sudo virsh net-undefine default

cat << EOF > maas.xml
<network>
  <name>default</name>
  <forward mode="bridge"/>
  <bridge name="br0"/>
</network>
EOF

sudo virsh net-define maas.xml

sudo virsh net-autostart default

sudo virsh net-start default
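
To check that the redefined default network really bridges VMs onto br0, you can inspect what libvirt stored. The helper below is a hypothetical, plain-text check of the XML printed by `virsh net-dumpxml default`, not a real XML parser; it accepts both quote styles because libvirt may write attributes with single quotes when it dumps a definition.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads libvirt network XML on stdin and succeeds if the
# network forwards in bridge mode onto the named host bridge.
# Plain-text matching only; assumes one attribute value per quoted string.
is_bridged_to() {
  local bridge="$1" xml
  xml="$(cat)"
  printf '%s\n' "$xml" | grep -Eq "<forward mode=[\"']bridge[\"']" &&
    printf '%s\n' "$xml" | grep -Eq "<bridge name=[\"']$bridge[\"']"
}
```

Usage: `sudo virsh net-dumpxml default | is_bridged_to br0 && echo "default network is bridged to br0"`.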

While we're at it, KVM also needs some storage defined:

sudo virsh pool-define-as default dir - - - - "/var/lib/libvirt/images"

sudo virsh pool-autostart default

sudo virsh pool-start default

In order to allow the hosts on the subnet (your KVM virtual machines) to communicate with the internet, some iptables rules are required. In the script below, replace enp4s0f1 with the interface that is connected to the internet (you can determine it from the ifconfig output).

sudo iptables -t nat -A POSTROUTING -o enp4s0f1 -j MASQUERADE

sudo iptables -A FORWARD -i enp4s0f1 -o br0 -m state --state RELATED,ESTABLISHED -j ACCEPT

sudo iptables -A FORWARD -i br0 -o enp4s0f1 -j ACCEPT

echo 'net.ipv4.ip_forward=1' | sudo tee -a /etc/sysctl.conf

echo 'net.ipv4.conf.br0.proxy_arp=1' | sudo tee -a /etc/sysctl.conf

echo 'net.ipv4.conf.br0.proxy_arp_pvlan=1' | sudo tee -a /etc/sysctl.conf
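
Appending with `tee -a` adds another copy of each line every time the commands are run, so running them twice leaves duplicates in /etc/sysctl.conf. A minimal idempotent alternative, sketched under the assumption that the file uses simple `key=value` lines, replaces any existing entry for the key (including a bare key without a value) before appending the new one. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set "key=value" in a sysctl config file without
# creating duplicate entries. Lines that already set the key (or name it
# without a value) are dropped and one fresh "key=value" line is appended.
# Comments and other keys are kept; assumes plain "key=value" syntax.
set_sysctl_conf() {
  local key="$1" value="$2" file="${3:-/etc/sysctl.conf}"
  local tmp
  tmp="$(mktemp)" || return 1
  if [ -f "$file" ]; then
    awk -v k="$key" '
      { line = $0; gsub(/[ \t]/, "", line) }   # compare without blanks
      line != k && index(line, k "=") != 1 { print }
    ' "$file" > "$tmp"
  fi
  echo "$key=$value" >> "$tmp"
  # Write back through cat so the file keeps its owner and permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

From a root shell (for example after `sudo bash`), `set_sysctl_conf net.ipv4.conf.br0.proxy_arp_pvlan 1` leaves exactly one `net.ipv4.conf.br0.proxy_arp_pvlan=1` line in /etc/sysctl.conf however often it is run; the updated entry ends up at the end of the file.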

# Persist the iptables rules. During installation, the installer asks whether to save the current rules.

sudo apt-get install iptables-persistent

# If you want to save the rules again afterwards, run:

# sudo sh -c 'iptables-save > /etc/iptables/rules.v4'

It is a good idea to reboot now to make sure the state of your network is reproducible. The sysctl settings are applied at boot before the br0 bridge becomes available, so after a reboot you'll need to execute the three lines below. I do not have a solution for that yet.

sudo sysctl -w net.ipv4.ip_forward=1

sudo sysctl -w net.ipv4.conf.br0.proxy_arp=1

sudo sysctl -w net.ipv4.conf.br0.proxy_arp_pvlan=1
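
One possible workaround, untested on this setup and not part of the original instructions, is a small script that waits until br0 exists and only then applies the settings. It could be run by hand after boot or hooked into whatever boot mechanism you prefer; the function names and the 60-second timeout are assumptions.

```bash
#!/usr/bin/env bash
# Hypothetical workaround sketch: wait for the bridge, then apply sysctls.

# Succeeds once <netdir>/<iface> exists; fails after roughly <timeout> seconds.
# netdir is a parameter only so the wait can be tried outside /sys.
wait_for_iface() {
  local iface="$1" timeout="${2:-60}" netdir="${3:-/sys/class/net}"
  local waited=0
  until [ -e "$netdir/$iface" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $iface" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}

# Must run as root: applies the same three settings as the commands above.
apply_bridge_sysctls() {
  local iface="${1:-br0}"
  wait_for_iface "$iface" 60 || return 1
  sysctl -w net.ipv4.ip_forward=1 \
    "net.ipv4.conf.$iface.proxy_arp=1" \
    "net.ipv4.conf.$iface.proxy_arp_pvlan=1"
}
```

For example, with the functions saved in a hypothetical file br0-sysctl.sh: `sudo bash -c 'source ./br0-sysctl.sh && apply_bridge_sysctls br0'`.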

Also, it sometimes helps to restart MaaS services, for example:

sudo service maas-rackd restart

MaaS

The next step is setting up MaaS.

sudo maas init

This will ask you for an admin user, and you can also supply an SSH key. After you've answered the questions, you can use the user you have created to log in to:

http://localhost:5240/MAAS

You need to enable DHCP in MaaS for the subnet you've created (10.20.81.0/24).

Also the maas OS user needs to be able to manage KVM. Add the maas user to the libvirt group:

sudo usermod -a -G libvirt maas

Create a public and private key pair for the maas user and add the public key to the maas user's authorized keys, so the private key can be used to log in as the maas user:

sudo chsh -s /bin/bash maas

sudo su - maas

ssh-keygen -f ~/.ssh/id_rsa -N ''

cd .ssh

cat id_rsa.pub > authorized_keys

chmod 600 ~/.ssh/authorized_keys
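
Before adding the pod in MaaS, it can save some debugging to verify the key setup from the maas user's shell. A hypothetical helper that checks the public key is in authorized_keys and that the file has mode 600:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the public key is listed verbatim in the
# authorized_keys file and that file has mode 600, matching the chmod above.
key_is_authorized() {
  local pubkey="$1" authfile="$2" mode
  if ! grep -qxF -f "$pubkey" "$authfile"; then
    echo "key from $pubkey not found in $authfile" >&2
    return 1
  fi
  mode="$(stat -c '%a' "$authfile")"
  if [ "$mode" != "600" ]; then
    echo "$authfile has mode $mode, expected 600" >&2
    return 1
  fi
}
```

In the `sudo su - maas` shell: `key_is_authorized ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys`. An end-to-end test of the connection MaaS will make is `virsh -c qemu+ssh://maas@127.0.0.1/system list --all` from the same shell; it should list VMs without asking for a password (the first connection may ask you to accept the host key).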

The public key also needs to be registered in MaaS: click on admin in the web interface and add the SSH public key.

Now MaaS needs to be configured to connect to KVM. Click Pods and add a pod with the virsh URL qemu+ssh://maas@127.0.0.1/system

Since you also installed virt-manager, you can manually create a VM and check that it becomes available in MaaS (it should PXE boot and start provisioning).

Juju

Now that the network, KVM and MaaS are configured, it's time for the next step: configuring Juju.

#Install juju

sudo snap install juju --classic

#Add your MaaS cloud environment

juju add-cloud --local

Use the following API endpoint: http://10.20.81.2:5240/MAAS. Do not use localhost or 127.0.0.1! This IP is also reachable from hosts on your subnet, which allows Juju to manage the different hosts.
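
If you prefer not to answer the interactive `juju add-cloud` questions, Juju can also read the cloud definition from a YAML file. The sketch below writes such a file for a MaaS cloud and refuses loopback endpoints, for the reason given above. The cloud name `maas` (matching the later `juju add-credential maas` and `juju bootstrap maas` commands) and the file name maas-cloud.yaml are assumptions; check `juju add-cloud --help` for how your Juju version accepts a definition file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a Juju cloud definition for a MaaS endpoint.
# Assumed cloud name "maas"; loopback endpoints are rejected because the
# endpoint must also be reachable from the VMs on the br0 subnet.
write_maas_cloud() {
  local endpoint="$1" out="${2:-maas-cloud.yaml}"
  case "$endpoint" in
    *://localhost|*://localhost[:/]*|*://127.*)
      echo "refusing loopback endpoint: $endpoint" >&2
      return 1 ;;
  esac
  cat > "$out" <<EOF
clouds:
  maas:
    type: maas
    auth-types: [oauth1]
    endpoint: $endpoint
EOF
}
```

`write_maas_cloud http://10.20.81.2:5240/MAAS` writes maas-cloud.yaml, while `write_maas_cloud http://localhost:5240/MAAS` fails with an error instead.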

Add credentials (the MaaS user). First obtain an API key from the MaaS GUI.

juju add-credential maas

Bootstrap Juju. This creates a controller environment through which Juju issues its commands. If this step succeeds, installing Charmed Kubernetes will very likely also work. Should it fail, you can issue juju kill-controller maas-controller to start again. Do not forget to clean up the VM in MaaS and KVM in that case.

juju bootstrap maas maas-controller

Installing Charmed Kubernetes

A full installation of Charmed Kubernetes requires considerable resources, such as 16 cores and more than 32 GB of memory. Most likely you don't have them available on your laptop.

Charms contain a description of the applications and the resources required to run them, so hosts can automatically be provisioned to accommodate those applications. You can override the resource requirements by using a so-called overlay.

For example, the original bundle looks like this: https://api.jujucharms.com/charmstore/v5/bundle/canonical-kubernetes-899/archive/bundle.yaml

I reduced the number of workers, the number of cores available to the workers and the amount of memory used by the individual master and the worker nodes so the entire thing would fit on my laptop.

cat << EOF > bundle.yaml
description: A highly-available, production-grade Kubernetes cluster.
series: bionic
applications:
  kubernetes-master:
    constraints: cores=1 mem=3G root-disk=16G
    num_units: 2
  kubernetes-worker:
    constraints: cores=2 mem=6G root-disk=16G
    num_units: 2
EOF
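
To see what the overlay asks of your laptop, you can add up the constraints it declares. A rough sketch in awk with a hypothetical function name, assuming the layout of the bundle.yaml above (constraints listed before num_units within each application, all sizes in G):

```bash
#!/usr/bin/env bash
# Hypothetical helper: total the cores, memory and root disk requested by an
# overlay. Assumes each application lists "constraints:" before "num_units:"
# and that mem/root-disk values are given in G (e.g. mem=3G).
sum_constraints() {
  awk '
    /^[ \t]+constraints:/ {
      cores = 0; mem = 0; disk = 0
      for (i = 2; i <= NF; i++) {
        split($i, kv, "=")
        val = kv[2]; sub(/G$/, "", val)
        if (kv[1] == "cores") cores = val
        else if (kv[1] == "mem") mem = val
        else if (kv[1] == "root-disk") disk = val
      }
      have = 1
    }
    /^[ \t]+num_units:/ && have {
      n = $2
      tc += cores * n; tm += mem * n; td += disk * n
      have = 0
    }
    END { printf "cores=%d mem=%dG root-disk=%dG\n", tc, tm, td }
  ' "$1"
}
```

For the overlay above, `sum_constraints bundle.yaml` prints `cores=6 mem=18G root-disk=64G`. This only covers the two applications the overlay overrides; the other applications in the bundle and the Juju controller come on top. You can compare the total with what the host offers, for example with `nproc`, `free -g` and `df -h /var/lib/libvirt/images`.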

Create a model to deploy Charmed Kubernetes in:

juju add-model k8s

Now execute the command which will take a while (~15 minutes on my laptop):

juju deploy charmed-kubernetes --overlay bundle.yaml --trust

This command provisions machines using MAAS and deploys Charmed Kubernetes on top.
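
Rather than checking `juju status` by hand while the deployment runs, you can poll until a command succeeds. A generic retry helper (hypothetical name, plain shell) that retries a command at a fixed interval until a timeout expires:

```bash
#!/usr/bin/env bash
# Hypothetical helper: retry COMMAND every INTERVAL seconds until it succeeds
# or roughly TIMEOUT seconds of sleeping have passed (time spent running the
# command itself is not counted).
# Usage: wait_until TIMEOUT INTERVAL COMMAND [ARGS...]
wait_until() {
  local timeout="$1" interval="$2" waited=0
  shift 2
  until "$@"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "gave up after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}
```

For example, `mkdir -p ~/.kube && wait_until 3600 60 juju scp kubernetes-master/0:config ~/.kube/config` keeps retrying the kubeconfig copy used in the housekeeping step below, which should succeed once the master has written its config file.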

Some housekeeping

In order to access the environment, do the following after the deployment has completed (you can check its progress with juju status or juju gui):

sudo snap install kubectl --classic

sudo snap install helm --classic

mkdir -p ~/.kube

juju scp kubernetes-master/0:config ~/.kube/config
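
The copied config contains admin credentials for the cluster, so it is worth making it readable only by you. This hypothetical helper does that and prints the API server address(es) the file points to, as a quick sanity check before using kubectl or helm:

```bash
#!/usr/bin/env bash
# Hypothetical helper: restrict the kubeconfig to its owner (mode 600) and
# print every "server:" URL it contains.
check_kubeconfig() {
  local cfg="${1:-$HOME/.kube/config}"
  if [ ! -r "$cfg" ]; then
    echo "cannot read $cfg" >&2
    return 1
  fi
  chmod 600 "$cfg"
  awk '$1 == "server:" { print $2 }' "$cfg"
}
```

Usage: `check_kubeconfig && kubectl get nodes`.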

The resulting environment

After juju is done deploying Charmed Kubernetes you will end up with an environment which consists of 8 hosts (+1 juju controller). Below is the output of juju status after the deployment has completed.

You can also browse the juju GUI. 'juju gui' gives you the URL and credentials required to login.

You can look in the MaaS UI to see how your cores and memory have been claimed.

In virt-manager you can also see the different hosts.

'kubectl get nodes' gives you the worker nodes.

You can enter a VM with, for example, 'juju ssh kubernetes-master/0'.

If this does not work because provisioning failed or the controller is not available, you can also use the Juju private key directly, replacing the address with that of the machine you want to reach:

ssh -i ~/.local/share/juju/ssh/juju_id_rsa ubuntu@10.20.81.1

You can execute commands against all nodes which provide an application:

juju run --application kubernetes-master "uname -a"

Of course you can access the dashboard which is installed by default:

kubectl proxy

Next, open http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/#/login

You can use ~/.kube/config to log in.

Cleaning up

If you need to clean up, the best way to remove Charmed Kubernetes is:

juju destroy-model k8s

This removes the applications and the machines, so you can start over if you have broken your Kubernetes environment. Reinstalling it can be done with the two commands below:

juju add-model k8s

juju deploy charmed-kubernetes --overlay bundle.yaml --trust

If you want to go further back and even remove juju, I recommend the following procedure:

sudo snap remove juju --purge

sudo snap remove kubectl --purge

sudo snap remove helm --purge

rm -rf ~/.kube

rm -rf ~/.local/share/juju
