I'm a regular user of GitHub. Recently I discovered that GitHub also has a built-in CI/CD workflow solution called GitHub Actions. Curious about how this would work, I decided to try it out. I had previously built a Jenkins Pipeline to perform several static and dynamic application security tests on a Java project and decided to try to rebuild this pipeline using GitHub Actions. This blog post describes my first experiences and impressions.

First impressions

No external environment required

One of the immediate benefits I noticed is that, whereas a Jenkins Pipeline requires a Jenkins installation to run, GitHub Actions work on their own in your GitHub repository, so no external environment is required. Do mind that GitHub Actions have some limitations. If you want to use them extensively, you will probably need to pay eventually.

Integrated with GitHub

GitHub Actions are of course specific to GitHub and integrate well with repository functionality. Because of this, you would probably only use them if your source code is in GitHub. There are quite a lot of options to have a workflow triggered, for example on a push or pull request, on a schedule, or manually. Because GitHub Actions are integrated with GitHub and linked to your account, you automatically receive e-mails when a workflow succeeds or fails.
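The trigger types mentioned above map onto the `on` section of a workflow file. The following is a minimal sketch; the branch name `main` and the cron expression are placeholders I chose for illustration, not values from my own workflow.

```yaml
# Hypothetical trigger configuration; branch name and schedule are placeholders.
on:
  # Run on pushes to the main branch
  push:
    branches: [ main ]
  # Run on pull requests targeting the main branch
  pull_request:
    branches: [ main ]
  # Run on a schedule (cron syntax, UTC): every Monday at 06:00
  schedule:
    - cron: '0 6 * * 1'
  # Allow a manual run from the Actions tab
  workflow_dispatch:
```

You can combine as many of these events as you need; a workflow that lists only `workflow_dispatch` runs only when started manually.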

If you want to integrate Jenkins with GitHub, the communication is usually done via webhooks. This requires you to expose your Jenkins environment in a way GitHub can reach it. This can be challenging to do securely (might require you to punch holes in your firewall or use a polling mechanism). Also if you want to be informed of the results of a build by e-mail, you need to define mail server settings/credentials in Jenkins. For GitHub Actions this is not required.

Reporting capabilities

My Jenkins / SonarQube environment provides reporting capabilities. At the end of a build you can find various reports in Jenkins, and issues are created in SonarQube. In SonarQube you can define quality gates that determine whether the build fails.

Using GitHub Actions, this works differently. Letting a build fail is something you script explicitly instead of configuring it in a GUI. At the end of a build you can export artifacts. These can contain reports, but you need to open them yourself. Another option is to publish them to GitHub Pages (see here). GitHub Actions thus has no 'out of the box' reporting capability.
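To illustrate what "explicitly scripting" a failing build can look like: a step fails as soon as its script exits with a non-zero status. The sketch below assumes a hypothetical report file `target/findings.txt` with one finding per line, each starting with its severity; neither the file nor its format comes from my pipeline.

```yaml
steps:
  - name: Fail the build on high-severity findings
    run: |
      # Hypothetical report file, assumed to be written by an earlier step
      REPORT=target/findings.txt
      if [ ! -f "$REPORT" ]; then
        echo "Report $REPORT not found"
        exit 1
      fi
      # A non-zero exit code marks this step, and therefore the job, as failed
      if grep -q '^HIGH' "$REPORT"; then
        echo "High-severity findings found, failing the build"
        exit 1
      fi
```

This is roughly the scripted counterpart of a quality gate: the rule lives in the workflow file instead of in a GUI.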

GitHub has so-called "Issues" but these appear not to be meant to register individual findings of the different static code analysis tools. They work better to just register a single issue for a failed build. Of course, there are GitHub Actions available for that.
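As a rough sketch of registering a single issue for a failed build, the step below uses the GitHub CLI (`gh`), which is preinstalled on GitHub-hosted runners, rather than one of the marketplace Actions. The job layout, issue title and body are my own assumptions for illustration.

```yaml
# The token needs permission to create issues
permissions:
  contents: read
  issues: write

jobs:
  build:
    runs-on: ubuntu-20.04
    steps:
      # ... build steps go here ...
      - name: Open an issue when the build fails
        # Only runs when an earlier step in this job has failed
        if: failure()
        run: |
          gh issue create \
            --title "Build failed (run ${{ github.run_id }})" \
            --body "See ${{ github.server_url }}/${{ github.repository }}/actions/runs/${{ github.run_id }}"
        env:
          GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}
          GH_REPO: ${{ github.repository }}
```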

Extensibility

To be honest, I did not dive into how to create custom pipeline steps in my Jenkins environment. I noticed you can code some Groovy and integrate that with a Pipeline, but it would probably require quite a time investment to obtain the knowledge needed to get something working. For GitHub Actions, creating your own Actions is pretty straightforward and described here. You create a repository for your Action, a Dockerfile and a YAML file describing the inputs and outputs of the Action. Next you can directly use it in your GitHub workflows. As the Dutch would say: 'een kind kan de was doen' (literally 'a child can do the laundry', a saying which means 'it's child's play').
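The YAML file describing a Docker-based Action is called `action.yml` and lives next to the Dockerfile in the root of the Action's repository. Below is a minimal sketch; the Action name, the `target` input and the `report` output are hypothetical and only serve as an example.

```yaml
# action.yml for a hypothetical Docker container action
name: 'Example scan'
description: 'Runs a scan against a target URL from inside a container'
inputs:
  target:
    description: 'URL to scan'
    required: true
    default: 'http://localhost:8080'
outputs:
  report:
    description: 'Path of the generated report'
runs:
  # Build and run the Dockerfile in this repository
  using: 'docker'
  image: 'Dockerfile'
  # Passed as arguments to the container's entrypoint
  args:
    - ${{ inputs.target }}
```

A workflow would then reference the repository in a step with `uses: <owner>/<repository>@<ref>` and supply `target` under `with`.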

Challenges rebuilding a Jenkins Pipeline

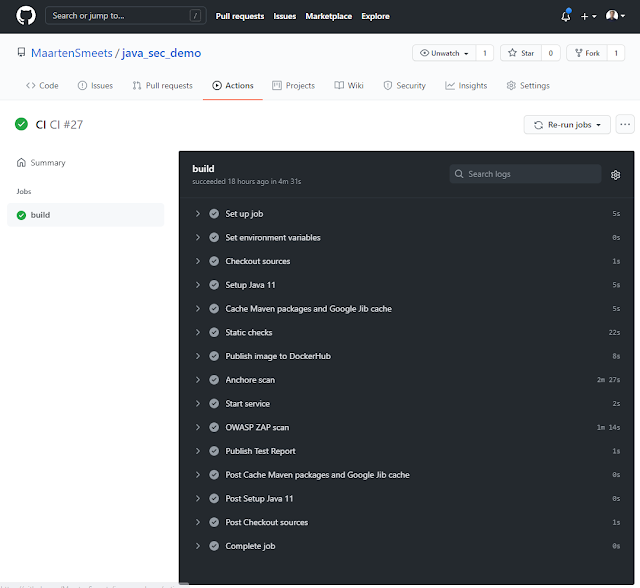

I had created a simple Jenkins Pipeline to compile Java code, perform static code analysis, create a container, deploy it to Docker Hub, scan the container using Anchore Engine, perform an automated penetration test using OWASP ZAP, feed the results to SonarQube and check a Quality Gate.

The main challenges I had with rebuilding the pipeline were working with containers during the build process and how to deal with caching.

Starting and stopping containers

The main challenge I had when building the Jenkins Pipeline was that Jenkins was running inside a container and I wanted to spawn new containers for Anchore, ZAP and my application. Eventually I decided to use docker-compose to start everything upfront and do a manual restart of my application after the container image in Docker Hub was updated. This challenge is similar when running Jenkins on K8s.

Using GitHub Actions, the environment is different. You choose a so-called runner for the build. This can be a self-hosted environment but can also be GitHub hosted. GitHub offers various flavors of runners. In essence, this gives you for example a Linux VM on which you can run stuff, start and stop containers and more or less do whatever you want to perform your build. The Jenkins challenge of starting and stopping containers from within a container is just not there!

For GitHub Actions, you should be aware there are various ways to deal with containers. You can define service containers which will start when your workflow starts. You can also execute certain actions in a container you specify with the 'uses' keyword. When these do not suffice, you can call docker directly yourself from a run command or by calling an external script. I chose the last option in my workflow. See my script here for starting the OWASP ZAP scan in a container.
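For comparison, this is roughly what the service container option looks like. It is a sketch, not what I used: service containers are started when the job starts, so an image that is built and pushed later in the same job would not be picked up, which is one reason to call docker directly as I did. The job name and the assumption that the application answers on `/` are mine.

```yaml
jobs:
  scan:
    runs-on: ubuntu-20.04
    # Service containers are started before the first step runs
    services:
      app:
        image: docker.io/maartensmeets/spring-boot-demo
        ports:
          # Map container port 8080 to port 8080 on the runner
          - 8080:8080
    steps:
      - name: Call the service from the runner
        # Assumes the application responds successfully on /
        run: curl --fail --retry 10 --retry-connrefused http://localhost:8080/
```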

Caching artifacts

To reduce build time, you can cache artifacts such as Maven dependencies and container images. For the container images you can think of images cached by Google Jib or images cached by the Docker daemon. I tried caching images from the Docker daemon but that increased my build time, most likely because of how the cache worked. Every image which was saved in the cache and later restored included all underlying layers. Certain layers are probably part of multiple images, so they are saved multiple times. Downloading only the required layers appeared to be quicker than caching them and restoring them on a next build. If you are more worried about network bandwidth than storage, you can of course still implement this.

For my project, caching the Maven and Jib artifacts caused my build time to go from 7m 29s to 4m 45s, so this appeared quite effective. This is probably because artifacts are only stored once (no duplicates) and because there are many small artifacts which each require a connection to a Maven repository. Restoring a saved repository was quicker than downloading in this case.

Setting environment variables

Setting environment variables in GitHub Actions was not so much of a challenge but in my opinion, the method I used felt a bit peculiar. For pushing an image from my Maven build to Docker Hub, I needed Docker Hub credentials. Probably I should have used a Docker Hub access token instead for added security but I didn't.

I needed to register my Docker Hub credentials in GitHub as secrets. You can do this by registering repository secrets and referring to them in your GitHub Actions workflow using something like ${{ secrets.DOCKER_USERNAME }}.

From these secrets, I created environment variables. I did this as follows:

    steps:
      - name: Set environment variables
        run: |
          echo "DOCKER_USERNAME=${{ secrets.DOCKER_USERNAME }}" >> $GITHUB_ENV
          echo "DOCKER_PASSWORD=${{ secrets.DOCKER_PASSWORD }}" >> $GITHUB_ENV

I could also have specified the environment at the step level or at the workflow level using the env keyword:

    env: # Set environment variables
      DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}
      DOCKER_PASSWORD: ${{ secrets.DOCKER_PASSWORD }}

The first option is very flexible since you can use any bash statement to fill variables. The second option, however, is more readable.
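The step-level variant mentioned above could look like the sketch below, applied to the step that pushes the image. It assumes, as in my workflow, that the Maven/Jib configuration reads the credentials from the DOCKER_USERNAME and DOCKER_PASSWORD environment variables.

```yaml
steps:
  - name: Publish image to Docker Hub
    run: mvn --batch-mode --update-snapshots compile jib:build
    # These variables are only visible to this step
    env:
      DOCKER_USERNAME: ${{ secrets.DOCKER_USERNAME }}
      DOCKER_PASSWORD: ${{ secrets.DOCKER_PASSWORD }}
```

Scoping the variables to a single step means the other steps in the job never see the credentials.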

Getting started with GitHub Actions

Getting started with a GitHub Actions workflow is relatively simple. You create a file called .github/workflows/main.yml in your GitHub repository. You can use one of the starter workflows GitHub provides for a specific language or create one from scratch.

When you have created such a file, you can go to the Actions tab in GitHub after you have logged in. My workflow file is shown below:

    name: CI

    # Controls when the action will run.
    on:
      # Allows you to run this workflow manually from the Actions tab
      workflow_dispatch:

    # A workflow run is made up of one or more jobs that can run sequentially or in parallel
    jobs:
      # This workflow contains a single job called "build"
      build:
        # The type of runner that the job will run on
        runs-on: ubuntu-20.04

        # Steps represent a sequence of tasks that will be executed as part of the job
        steps:
          - name: Set environment variables
            run: |
              echo "DOCKER_USERNAME=${{ secrets.DOCKER_USERNAME }}" >> $GITHUB_ENV
              echo "DOCKER_PASSWORD=${{ secrets.DOCKER_PASSWORD }}" >> $GITHUB_ENV

          # Checks-out your repository under $GITHUB_WORKSPACE, so your job can access it
          - name: Checkout sources
            uses: actions/checkout@v2

          # Installs JDK 11 on the runner
          - name: Setup Java 11
            uses: actions/setup-java@v1
            with:
              java-version: 11

          #- name: Cache Docker images
          #  uses: satackey/action-docker-layer-caching@v0.0.11
          #  # Ignore the failure of a step and avoid terminating the job.
          #  continue-on-error: true

          - name: Cache Maven packages and Google Jib cache
            uses: actions/cache@v2
            with:
              path: |
                ~/.m2
                ~/.cache/google-cloud-tools-java/jib
              key: ${{ runner.os }}-m2-${{ hashFiles('**/pom.xml') }}
              restore-keys: ${{ runner.os }}-m2

          - name: Static checks
            run: mvn --batch-mode --update-snapshots dependency-check:check pmd:pmd pmd:cpd spotbugs:spotbugs

          - name: Publish image to Docker Hub
            run: mvn --batch-mode --update-snapshots compile jib:build

          - name: Anchore scan
            uses: anchore/scan-action@v1
            with:
              image-reference: "docker.io/maartensmeets/spring-boot-demo"
              fail-build: true

          - name: Start service
            run: |
              docker network create zap
              docker run --pull always --name spring-boot-demo --network zap -d -p 8080:8080 docker.io/maartensmeets/spring-boot-demo

          - name: OWASP ZAP scan
            run: |
              # make file runnable, might not be necessary
              chmod +x "${GITHUB_WORKSPACE}/.github/zap_cli_scan.sh"
              # run script
              "${GITHUB_WORKSPACE}/.github/zap_cli_scan.sh"
              mv owaspreport.html target

          - name: 'Publish Test Report'
            if: always()
            uses: actions/upload-artifact@v2
            with:
              name: 'test-reports'
              path: |
                target/*.html
                target/site/
                ./anchore-reports/
