The OWASP Top 10 has listed the following vulnerability for several years (at least in 2013 and 2017): using components with known vulnerabilities. But software nowadays can be quite complex, consisting of many dependencies. How do you know that those components, and the versions you use, do not contain known vulnerabilities? Luckily, the OWASP Foundation also provides a dependency-check tool with plugins for various languages to make detecting this easier. In this blog post I'll show how you can incorporate it in a Jenkins pipeline running on Kubernetes, using Jenkins and SonarQube to display the results of the scan.

Articles containing tips, tricks and nice to knows related to IT stuff I find interesting. Also serves as online memory.

Thursday, October 15, 2020

Sunday, October 4, 2020

Surprisingly easy: Container vulnerability scanning in a Jenkins pipeline running on Kubernetes using Anchore Engine

Anchore Engine is a popular open source tool for container image inspection and vulnerability scanning. It is easily integrated in a Kubernetes environment as an admission controller or in a Jenkins build pipeline using a plugin. A while ago I took a look at Anchore Engine and created a small introductory presentation and Katacoda scenario for it. The Katacoda scenario allows you to try out Anchore Engine without having to set up your own container environment. In this blog I'll go a step further and illustrate how you can incorporate an Anchore Engine container scan inside your Java build pipeline, which I illustrated here. Anchore Engine is deployed to Kubernetes, configured in Jenkins (which also runs on Kubernetes) and incorporated in a Jenkins Pipeline during a build process. Only if the container has been deemed secure by the configured Anchore Engine policy is it allowed to be deployed to Kubernetes. I will also show how to update policies using the CLI.

Wednesday, September 23, 2020

Kubernetes: Building and deploying a Java service with Jenkins

Kubernetes has become the de facto container orchestration platform to run applications on. Java applications are no exception to this. When you use a PaaS provider for a hosted Kubernetes, that provider sometimes also offers a CI/CD solution. However, this is not always the case. When hosting Kubernetes yourself, you also need to implement a CI/CD solution.

Jenkins is a popular tool to use when implementing CI/CD solutions. Jenkins can also run quite easily in a Kubernetes environment. When you have Jenkins installed, you need to have a Git repository to deploy your code from, a Jenkins pipeline definition, tools to wrap your Java application in a container, a container registry to deploy your container to and some files to describe how the container should be deployed and run on Kubernetes. In this blog post I'll describe a simple end-to-end solution to deploy a Java service to Kubernetes. This is a minimal example so there is much room for improvement. It is meant to get you started quickly.

Friday, July 31, 2020

OpenEBS: cStor storage engine on KVM

Thursday, July 30, 2020

Production ready Kubernetes on your laptop. Kubespray on KVM

Monday, July 27, 2020

Scanning container images for vulnerabilities using Anchore Engine

Thursday, June 25, 2020

OBS Studio + Snap Camera: Putting yourself in your presentation live for free!

Friday, May 22, 2020

OpenEBS: Create persistent storage in your Charmed Kubernetes cluster

Thursday, May 21, 2020

StorageOS: Create persistent storage in your Charmed Kubernetes cluster

Wednesday, May 20, 2020

Charmed Kubernetes on KVM using MAAS and juju

Thursday, April 30, 2020

Quick and easy: A multi-node Kubernetes cluster on CentOS 7 + QEMU/KVM (libvirt)

There are several options to run Kubernetes locally to get some experience with it as a developer, for example Minikube, MicroK8s and MiniShift. These options, however, are not representative of a real environment. For example, they usually do not have separate master and worker nodes. Running locally requires quite a different configuration compared to running multiple nodes in VMs. Think for example about how to deal with storage and a container registry which you want to share across the different nodes. Installing a full-blown environment requires a lot of work and resources. Using a cloud service is usually not free, and you usually have little to no control over the environment Kubernetes is running in.

In this blog I'll describe a 'middle way': getting an easy-to-manage, small multi-node Kubernetes environment running in different VMs. You can use this environment, for example, to learn what the challenges of clusters are and how to deal with them efficiently.

It uses the work done here with some minor additions to get a dashboard ready.

Wednesday, April 8, 2020

Spring: Blocking vs non-blocking: R2DBC vs JDBC and WebFlux vs Web MVC

Tuesday, March 31, 2020

The size of Docker images containing OpenJDK 11.0.6

Sunday, February 23, 2020

Secure browsing using a local SOCKS proxy server (on desktop or mobile) and an always free OCI compute instance as SSH server

An SSH server in combination with a locally running SOCKS proxy server allows you to browse the internet more securely, for example from public Wi-Fi hotspots, by routing your internet traffic through a secure channel via a remote server. If you combine this with DNS over HTTPS, which is currently at least available in Firefox and Chrome, it will be more difficult for other parties to analyse your traffic. It also allows you to access resources from a server outside of a company network, which can be useful if, for example, you want to check how a company-hosted service looks to a customer from the outside. Having a server in a different country as a proxy can also have benefits if certain services are only available from a certain country (a similar benefit to using a VPN or Tor) or as a means to circumvent censorship.

Do check what is allowed by your company and your ISP, and what is legal within your country, before using such techniques though. I of course don't want you to do anything illegal and blame me for it ;)

Saturday, February 1, 2020

HTTP benchmarking using wrk. Parsing output to CSV or JSON using Python

Parsing the wrk output is a challenge. It would be nice to have a feature to output the results, all in the same units, as a CSV or JSON file. More people have asked for this, and the answer was: do some LuaJIT scripting to achieve that. Since I'm no Lua expert and, to be honest, don't have any people in my vicinity who are, I decided to parse the output using Python (my favorite language for data processing and visualization) and provide you with the code so you don't have to repeat this exercise.

You can see example Python code of this here.
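To give an idea of what such parsing involves, here is a minimal sketch, not the code from the post itself. It assumes the default plain-text wrk summary (a `Latency` line with average, standard deviation and maximum, plus a `Requests/sec:` line) and converts the time values to milliseconds. The names `to_ms`, `parse_wrk` and `SAMPLE` are made up for this illustration, and the sample text is only an illustrative input.

```python
import json
import re

# Multipliers to convert the time suffixes wrk prints into milliseconds.
TIME_UNITS_MS = {"us": 0.001, "ms": 1.0, "s": 1000.0, "m": 60000.0, "h": 3600000.0}


def to_ms(value):
    """Convert a wrk time value such as '635.91us' or '12.92ms' to milliseconds."""
    match = re.fullmatch(r"([\d.]+)(us|ms|s|m|h)", value)
    if match is None:
        raise ValueError(f"Unrecognised time value: {value!r}")
    number, unit = match.groups()
    return float(number) * TIME_UNITS_MS[unit]


def parse_wrk(output):
    """Extract latency (in ms) and requests/sec from wrk's text summary."""
    result = {}
    latency = re.search(r"Latency\s+(\S+)\s+(\S+)\s+(\S+)", output)
    if latency:
        avg, stdev, maximum = latency.groups()
        result["latency_avg_ms"] = to_ms(avg)
        result["latency_stdev_ms"] = to_ms(stdev)
        result["latency_max_ms"] = to_ms(maximum)
    rps = re.search(r"Requests/sec:\s+([\d.]+)", output)
    if rps:
        result["requests_per_sec"] = float(rps.group(1))
    return result


# Illustrative input in the shape of a wrk summary (not a real measurement).
SAMPLE = """\
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency   635.91us    0.89ms  12.92ms   93.69%
    Req/Sec    56.20k     8.07k   62.00k    86.54%
Requests/sec: 748868.53
"""

if __name__ == "__main__":
    print(json.dumps(parse_wrk(SAMPLE), indent=2))
```

Because every time value ends up in the same unit, the resulting dictionary can be written to JSON directly or appended as a row to a CSV file.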

Thursday, January 2, 2020

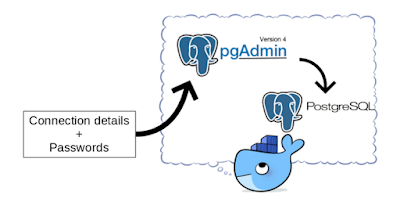

pgAdmin in Docker: Provisioning connections and passwords

The pgAdmin image provided on Docker Hub does not contain any server connection details. When your pgAdmin container changes regularly (think about changes to database connection details and keeping pgAdmin up to date), you might not want to enter the connections and passwords manually every time. This is especially true if you use a single pgAdmin instance to connect to many databases. A manual step also prevents a fully automated build process for the pgAdmin container.

You can export/import connection information, but you cannot export passwords. It is a bother, especially in development environments where the security aspect is less important, to look up passwords every time you need them. How can you fix this and make your life a little bit easier?

In this blog I'll show how to create a simple script to automate creating connections and supplying password information, so the pgAdmin instance is ready for use when you log in to the console for the first time! This consists of provisioning the connections and provisioning the password files. You can find the files here.
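As a rough sketch of the two provisioning steps (not the script from the post), the Python below generates a server definition document and a matching password file from one list of connections. The password file uses the standard PostgreSQL `host:port:database:username:password` line format. The JSON key names (`Servers`, `Name`, `Group`, `Host`, `Port`, `MaintenanceDB`, `Username`, `SSLMode`, `PassFile`) are my assumption of what pgAdmin's server import expects, so check them against the pgAdmin documentation for your version. The `/pgpassfile` path, the function names and the example connection are hypothetical.

```python
import json


def escape_pgpass(value):
    """Escape backslashes and colons, as the pgpass file format requires."""
    return str(value).replace("\\", "\\\\").replace(":", "\\:")


def build_servers_json(connections, passfile="/pgpassfile"):
    """Build a server definition document (key names are an assumption)."""
    servers = {}
    for index, conn in enumerate(connections, start=1):
        servers[str(index)] = {
            "Name": conn["name"],
            "Group": "Servers",
            "Host": conn["host"],
            "Port": conn["port"],
            "MaintenanceDB": conn["database"],
            "Username": conn["username"],
            "SSLMode": "prefer",
            "PassFile": passfile,  # hypothetical location inside the container
        }
    return json.dumps({"Servers": servers}, indent=2)


def build_pgpass(connections):
    """Build pgpass content: one host:port:database:username:password line each."""
    lines = []
    for conn in connections:
        fields = (conn["host"], conn["port"], conn["database"],
                  conn["username"], conn["password"])
        lines.append(":".join(escape_pgpass(field) for field in fields))
    return "\n".join(lines) + "\n"


# Hypothetical example connection, for illustration only.
CONNECTIONS = [
    {"name": "dev-db", "host": "db.example.local", "port": 5432,
     "database": "appdb", "username": "app", "password": "s3cr:et"},
]

if __name__ == "__main__":
    print(build_servers_json(CONNECTIONS))
    print(build_pgpass(CONNECTIONS), end="")
```

Keeping both files derived from a single list avoids the connection details and the passwords drifting apart. Note that PostgreSQL clients ignore a password file with permissions that are too open, so the generated file normally needs mode 0600.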